U.S.-based Google Research scientist Dr. Mercy Nyamewaa Asiedu has urged responsible innovation in artificial intelligence (AI). She warned that tools emerging from rapid advances in generative AI, and any future superintelligent systems, must be carefully assessed before large-scale adoption, particularly in healthcare.

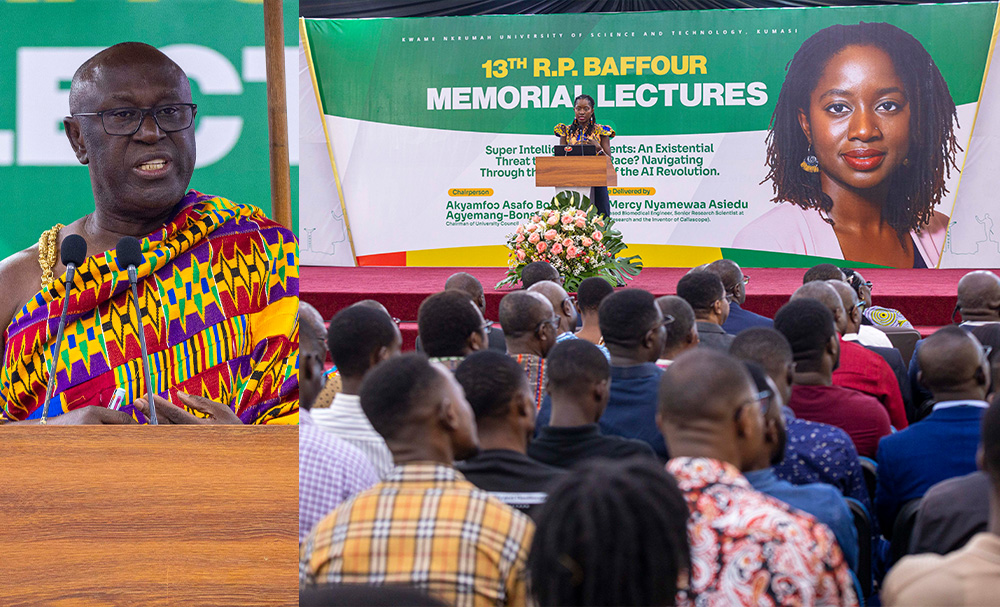

Delivering her second lecture at the 13th edition of the RP Baffour Memorial Lecture Series, Dr. Asiedu spoke on “Case Studies of AI Applications and the Potential of Superintelligence: Benefits, Risks, and Challenges.”

She outlined four critical concerns while encouraging participants to explore new research frontiers.

“Generative AI models can produce incorrectness. There are so many hallucinations. So, if it’s been used in health areas, it must be carefully assessed because inaccurate results could endanger patients,” she said.

She added that AI risks exacerbating inequities if training data fails to reflect diverse contexts. “Bias and local performance need to be studied before AI is deployed,” she advised.

Dr. Asiedu also cautioned against deploying systems whose internal processes remain opaque.

“Many of these models still operate as a black box,” she said, describing limited transparency as a barrier to accountability in healthcare.

Despite the risks, she encouraged participants to pursue impactful research.

“AGI and Superintelligence is new and currently still a hypothesis under research development, so you can conduct further research on that and make an impact by bringing back your findings to your communities,” she said.

Council Chair Akyamfour Asafo Boakye Agyemang-Bonsu echoed the call for responsible adoption, urging the Medical and Dental Council to advocate for AI-assisted tools to reduce medical errors.

“We may have to request through our policy dialogue with government that any AI tool that will be deployed in the country for medical applications must go through algorithm impact assessment to validate whether the data that has been used for the development of that AI model is in sync with our local model data,” he said.

He stressed the importance of multidisciplinary collaboration at the Kwame Nkrumah University of Science and Technology (KNUST) to support the development of local models. “It is very critical that we always need to think interdisciplinary to make AI more useful and helpful for our communities,” he charged.

The Council Chair further encouraged faculty members, students and the public to embrace AI. “Emerging technologies are coming, and we need to go with them. If we don’t, we will be left behind,” he said.